Comprehensive Guide To Azure Data Engineering

Introduction

In today's data-driven world, the ability to store, process, and analyze large volumes of data is crucial for businesses. This guide provides a comprehensive understanding of various Azure data engineering services, how they build upon traditional data systems like Hadoop, and how they integrate to form robust data solutions in the cloud.


Understanding Big Data and Data Engineering

Big Data refers to extremely large datasets that are complex and grow at an exponential rate, making them difficult to process using traditional data processing applications.

Data Engineering involves designing and building systems that allow for the collection, storage, and analysis of big data. It focuses on the practical applications of data collection and analysis, ensuring that data is accessible and usable for data scientists and analysts.

Introduction to Hadoop and HDFS

What is Hadoop?

Hadoop is an open-source framework that allows for the distributed processing of large datasets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage.

Hadoop Distributed File System (HDFS)

HDFS is the primary storage system used by Hadoop applications. It is optimized for storing large files and for streaming (sequential, high-throughput) data access patterns.

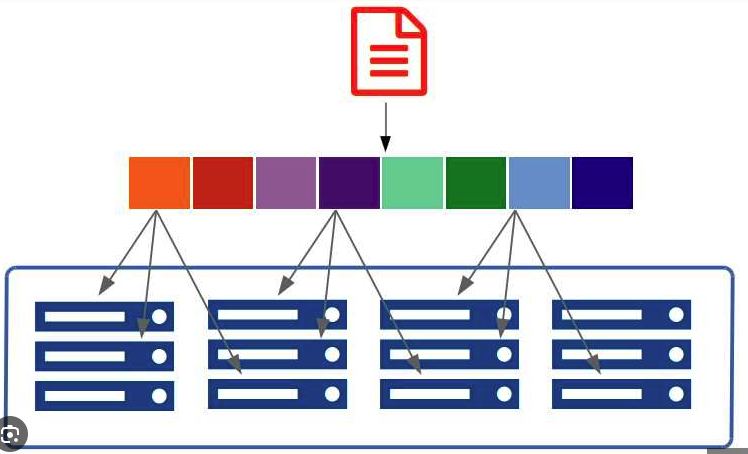

How HDFS Works

- Data Blocks: Files are divided into fixed-size blocks (default is 128 MB).

- Data Distribution: Blocks are distributed across multiple nodes (computers) in a cluster.

- Replication: Each block is replicated on multiple nodes (three by default) to ensure reliability and fault tolerance.

- Parallel Processing: Jobs are divided into tasks that operate on individual blocks, allowing for concurrent execution across nodes.
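The block, distribution, and replication steps above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the node names are hypothetical, and replicas are placed round-robin, whereas real HDFS uses a rack-aware placement policy.

```python
# Simplified model of HDFS block splitting and replica placement.
# Assumption: round-robin placement; real HDFS uses a rack-aware policy.
BLOCK_SIZE_MB = 128      # HDFS default block size
REPLICATION_FACTOR = 3   # HDFS default replication factor


def split_into_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Return block sizes in MB; only the last block may be smaller than block_size_mb."""
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    return [block_size_mb] * full_blocks + ([remainder] if remainder else [])


def place_replicas(num_blocks, nodes, replication=REPLICATION_FACTOR):
    """Assign each block to `replication` distinct nodes in round-robin order."""
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return {
        block_id: [nodes[(block_id + r) % len(nodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


blocks = split_into_blocks(600)
print(blocks)  # [128, 128, 128, 128, 88]

nodes = ["node1", "node2", "node3", "node4"]  # hypothetical node names
for block_id, replicas in place_replicas(len(blocks), nodes).items():
    print(f"block {block_id} ({blocks[block_id]} MB) -> {replicas}")
```

Because every block has copies on three different nodes, losing any single node still leaves two replicas of each block.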

Example:

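- An emp.txt file of 600 MB is divided into 5 blocks: four full 128 MB blocks and a final 88 MB block (4 × 128 MB + 88 MB = 600 MB).

- Blocks are stored on different nodes in the cluster.

- A query like SELECT SUM(salary) FROM emp is executed in parallel: each node sums the salaries in its local block, and these partial sums are then added together to produce the final result.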
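The parallel SUM in the example above follows a split-apply-combine (map/reduce) pattern. The sketch below uses hypothetical salary values, and the workers of a thread pool stand in for cluster nodes; it shows the structure of the computation, not how Hadoop actually schedules tasks.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical data: each inner list stands for the salary column of one block.
blocks = [
    [50_000, 62_000, 48_000],
    [71_000, 55_000],
    [90_000, 43_000, 67_000],
]


def partial_sum(block):
    """'Map' step: sum only the rows stored in this block."""
    return sum(block)


def parallel_sum(blocks, max_workers=4):
    """Compute per-block partial sums concurrently, then combine them."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        partials = list(pool.map(partial_sum, blocks))
    # 'Reduce' step: combine the per-block partial results into one total.
    return sum(partials), partials


total, partials = parallel_sum(blocks)
print(partials)  # [160000, 126000, 200000]
print(total)     # 486000
```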

Diagram: HDFS Data Block Distribution, illustrating how HDFS divides a file into blocks and distributes them across nodes.

Advantages of HDFS

- Scalability: Easily add more nodes to the cluster to increase storage and processing capacity.

- Fault Tolerance: Data is replicated across multiple nodes; if one fails, data can be retrieved from another.

- High Throughput: Handles large datasets and delivers high aggregate throughput by reading blocks from many nodes in parallel.

- Flexibility: Can store and process structured, semi-structured, and unstructured data.

Limitations of HDFS

- Complexity: Requires expertise to set up and manage clusters.

- High Initial Costs: Physical infrastructure (servers, storage, networking) can be expensive.

- Inefficient for Small Files: The NameNode keeps metadata for every file and block in memory, so storing many small files strains the NameNode and reduces efficiency.

- No Native Support for ACID Transactions: Lacks built-in transactional guarantees and mechanisms for enforcing data integrity constraints such as referential integrity.
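To put rough numbers on the small-files limitation, the sketch below counts the file and block entries the NameNode would track for the same 10 GB of data stored two ways. It is a simplified count under stated assumptions: directories are ignored and the file sizes are hypothetical.

```python
import math

BLOCK_SIZE_MB = 128  # HDFS default block size


def namenode_entries(num_files, file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Count file + block entries the NameNode tracks in memory (directories ignored)."""
    blocks_per_file = math.ceil(file_size_mb / block_size_mb)
    return num_files * (1 + blocks_per_file)


# The same 10 GB (10,240 MB) of data stored two ways:
print(namenode_entries(num_files=10, file_size_mb=1024))      # 90 (10 files x 9 entries)
print(namenode_entries(num_files=102_400, file_size_mb=0.1))  # 204800 (each ~100 KB file still needs a block)
```

That is over 2,000 times more metadata entries for the same data, which is why small files are usually compacted into larger ones before being stored in HDFS.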

The Shift to Cloud-Based Solutions

Benefits of Cloud Computing

- Cost Efficiency: Pay-as-you-go pricing models eliminate large upfront costs.

- Scalability: Resources can be scaled up or down based on demand.

- Managed Services: Cloud providers handle infrastructure management, updates, and maintenance.

- Global Accessibility: Services are accessible from anywhere with an internet connection.

- Reliability: High availability and disaster recovery capabilities are built-in.

Overview of Azure Data Engineering Services

Azure provides a suite of services designed to address various aspects of data engineering:

- Azure Data Lake Storage (ADLS)

- Azure Synapse Analytics

- Azure SQL Database

- Azure Data Factory (ADF)

- Azure Databricks

- Azure Cosmos DB

- Azure Stream Analytics

- Azure Key Vault

Azure Data Lake Storage (ADLS)

What is Azure Data Lake Storage?

Azure Data Lake Storage is a scalable and secure data lake for high-performance analytics workloads. It combines the power of a file system with massive scale and economy to help speed time to insight.

Features of ADLS

- Unlimited Storage: Scales to petabytes and exabytes of data.

- All Data Types Supported:

- Structured: Relational data, CSV files.

- Semi-Structured: JSON, XML.

- Unstructured: Images, videos, audio files.

- Optimized Performance: Designed for high-throughput, parallel processing.

- Hierarchical Namespace: Provides a filesystem-like structure for data organization.

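- Security: Integrates with Microsoft Entra ID (formerly Azure Active Directory) for access control and supports POSIX-like access control lists (ACLs) on files and directories.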
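With the hierarchical namespace enabled, ADLS Gen2 data is organized into real directories, and analytics engines such as Spark address it through the ABFS driver using URIs of the form abfss://<container>@<account>.dfs.core.windows.net/<path>. The helper below builds such a URI; the function name and the account, container, and path values are hypothetical.

```python
def abfss_uri(account, container, path=""):
    """Build an ADLS Gen2 URI: abfss://<container>@<account>.dfs.core.windows.net/<path>.
    Leading/trailing slashes on the path are trimmed; no other encoding is applied."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.strip('/')}"


# Hypothetical storage account, container, and directory layout.
print(abfss_uri("mydatalake", "raw", "sales/2024/01/orders.csv"))
# abfss://raw@mydatalake.dfs.core.windows.net/sales/2024/01/orders.csv
```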

Comparing HDFS and ADLS

Similarities:

- Both provide distributed, file-system-style storage designed for large-scale data; ADLS Gen2 is accessible from Hadoop-compatible engines through the ABFS driver.

- Support for parallel processing and high throughput.

- Capable of handling various data types.

Differences:

- Management:

- HDFS: Requires managing the cluster infrastructure yourself, typically on-premises.

- ADLS: Fully managed by Azure.

- Scalability:

- HDFS: Limited by physical hardware.

- ADLS: Virtually unlimited scalability.

- Integration:

- HDFS: Integrates primarily with Hadoop ecosystem tools such as Hive, MapReduce, and Spark.

- ADLS: Seamless integration with other Azure services.


Limitations of ADLS

- No Referential Integrity: Cannot enforce relationships (like foreign keys) between different data entities stored as files.

- No Native ACID Transactions: Lacks built-in support for atomicity, consistency, isolation, and durability properties.
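Because ADLS stores entities as independent files, relationships such as foreign keys have to be validated by the pipeline itself. A minimal sketch, assuming two hypothetical CSV extracts (orders and customers), that flags orders whose customer_id has no matching customer:

```python
import csv
import io

# Hypothetical contents of two separate files in the lake.
orders_csv = """order_id,customer_id
1,C1
2,C2
3,C9
"""
customers_csv = """customer_id,name
C1,Alice
C2,Bob
"""


def find_orphans(child_rows, parent_rows, key):
    """Return child rows whose foreign key has no matching parent row."""
    parent_keys = {row[key] for row in parent_rows}
    return [row for row in child_rows if row[key] not in parent_keys]


orders = list(csv.DictReader(io.StringIO(orders_csv)))
customers = list(csv.DictReader(io.StringIO(customers_csv)))
orphans = find_orphans(orders, customers, "customer_id")
print(orphans)  # [{'order_id': '3', 'customer_id': 'C9'}]
```

In practice such a check would typically run in the processing layer (for example, a Spark job) before data is published; the plain-Python version here only illustrates the logic.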

Azure Synapse Analytics

Introduction to Azure Synapse Analytics

Azure Synapse Analytics is an integrated analytics service that accelerates time to insight across data warehouses and big data systems. It brings together enterprise data warehousing and big data analytics.

Components of Azure Synapse Analytics

- Azure Data Lake Storage Gen2:

- The foundational storage layer for Synapse.

- Synapse SQL Pool:

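- A dedicated SQL pool offering massively parallel processing (MPP) capabilities for data warehousing; serverless SQL pools are also available for querying data in the lake on demand.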

- Synapse Spark Pool:

- Provides Apache Spark for large-scale data processing.

- Synapse Pipelines:

- Data integration and orchestration tool similar to ADF.
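MPP in a dedicated SQL pool works by spreading each table across 60 distributions; for a hash-distributed table, the hash of a chosen distribution column decides which distribution a row lands in, and each distribution is processed in parallel. The sketch below models that idea with hypothetical rows. Assumption: MD5 is used only for illustration; Synapse uses its own internal hash function.

```python
import hashlib
from collections import Counter

NUM_DISTRIBUTIONS = 60  # dedicated SQL pools split each table into 60 distributions


def distribution_for(key):
    """Map a distribution-column value to a distribution number in [0, 60).
    MD5 is an illustrative stand-in, not Synapse's actual hash function."""
    digest = hashlib.md5(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_DISTRIBUTIONS


# Hypothetical sales rows, hash-distributed on customer_id.
rows = [{"customer_id": f"C{i}", "amount": i * 10} for i in range(1, 1001)]

# Each distribution computes a partial total over its own rows...
partial_totals = Counter()
for row in rows:
    partial_totals[distribution_for(row["customer_id"])] += row["amount"]

# ...and the partial totals are combined into the final result.
grand_total = sum(partial_totals.values())
print(grand_total)  # 5005000
```

Because the same key always hashes to the same distribution, two tables distributed on the same column can be joined on it without moving rows between distributions.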


Use Cases of Azure Synapse Analytics

- Enterprise Data Warehousing: For storing and querying large volumes of structured data.

- Big Data Analytics: Process and analyze big data using Spark.

- Data Integration: Ingest, prepare, and manage data with pipelines.

- Unified Analytics Platform: Combines data warehousing and big data analytics.

Azure SQL Database

What is Azure SQL Database?

Azure SQL Database is a fully managed relational database service based on the latest stable version of Microsoft SQL Server. It provides high availability, scalability, and security.

When to Use Azure SQL Database

- OLTP Workloads: Ideal for transactional applications that require rapid response times.

- Scalability Needs: Compute and storage can be scaled up or down as demand changes, and the serverless compute tier can scale compute automatically based on workload.

- Managed Service Preference: Eliminates the need to manage hardware, operating systems, patching, and backups.
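OLTP workloads depend on ACID transactions, the guarantee that HDFS and ADLS lack. The sketch below illustrates atomicity using Python's built-in sqlite3 module as a local stand-in for a relational database; the table, account IDs, and amounts are hypothetical. Against Azure SQL Database you would connect through a SQL Server driver instead, but the commit-or-roll-back idea is the same.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (id TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("A", 100), ("B", 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move funds atomically: both updates commit together, or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False


print(transfer(conn, "A", "B", 30))   # True
print(transfer(conn, "A", "B", 500))  # False: CHECK fails, so the credit to B is rolled back too
print(dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id")))  # {'A': 70, 'B': 80}
```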

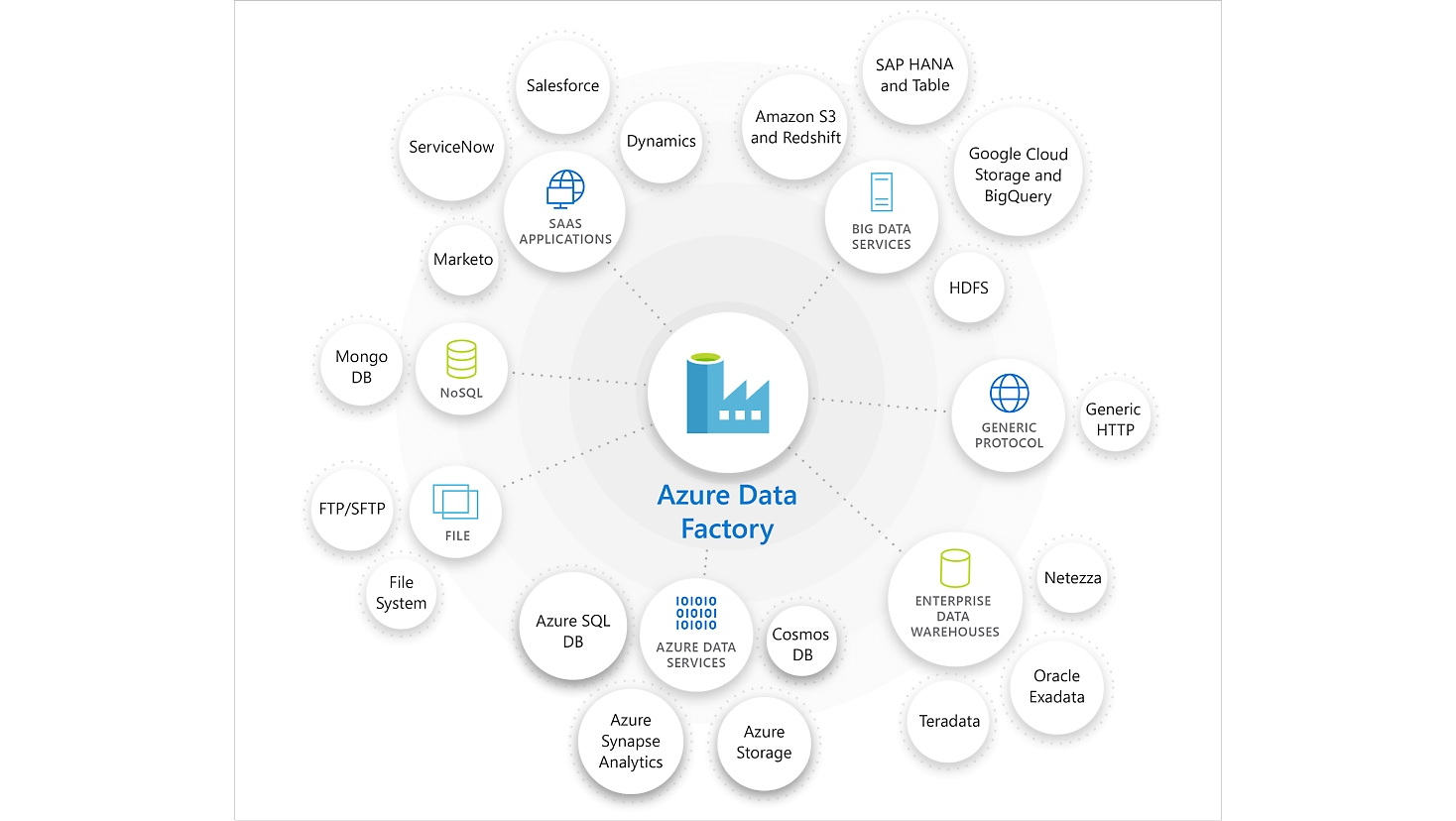

Azure Data Factory (ADF)

Introduction to Azure Data Factory

Azure Data Factory is a cloud-based data integration service. It enables the creation of data-driven workflows (pipelines) that orchestrate data movement and transform data at scale.

ETL vs. ELT

- ETL (Extract, Transform, Load):

- Data is extracted from sources and transformed before being loaded into the target system.

- Best for: Small to medium datasets.

- ELT (Extract, Load, Transform):

- Data is extracted and loaded into the target system, and then transformed.

- Best for: Large datasets where the target system can efficiently handle transformations.
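The practical difference between ETL and ELT is where the transformation runs. The sketch below loads the same hypothetical order records both ways, using an in-memory sqlite3 database as a stand-in for the target system: ETL converts the values in Python before loading, while ELT loads the raw strings into a staging table and transforms them with SQL inside the target.

```python
import sqlite3

# Hypothetical raw source records: (order_id, amount in cents), both as strings.
source = [("1", "1999"), ("2", "500"), ("3", "12000")]


def etl(conn):
    """ETL: transform in the pipeline, then load only the cleaned rows."""
    cleaned = [(int(order_id), int(cents) / 100) for order_id, cents in source]
    conn.execute("CREATE TABLE orders_etl (order_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", cleaned)


def elt(conn):
    """ELT: load raw data as-is into staging, then transform with SQL in the target."""
    conn.execute("CREATE TABLE staging_orders (order_id TEXT, cents TEXT)")
    conn.executemany("INSERT INTO staging_orders VALUES (?, ?)", source)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               CAST(cents AS INTEGER) / 100.0 AS amount
        FROM staging_orders
        """
    )


conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
query = "SELECT order_id, amount FROM {} ORDER BY order_id"
print(conn.execute(query.format("orders_etl")).fetchall())  # [(1, 19.99), (2, 5.0), (3, 120.0)]
print(conn.execute(query.format("orders_elt")).fetchall())  # [(1, 19.99), (2, 5.0), (3, 120.0)]
```

Both paths produce the same result; ELT keeps the raw staging data in the target and relies on the target's compute for the transformation, which is why it suits large datasets on scalable platforms.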

Features of ADF

- Code-Free User Interface: Build workflows without writing code.

- Broad Connectivity: Supports a wide range of data sources and destinations.

- Scalability: Designed for both small and large data volumes.

- Data Transformation: Perform transformations using data flows.

- Scheduling and Monitoring: Schedule pipelines and monitor their execution.

ADF Data Flows

- Definition: Visual data transformation tool within ADF.

- Capabilities:

- Perform complex transformations without code.

- Scales using Spark under the hood.

- Limitations:

- Node Limit: Can use up to 256 nodes.

- Data Type Support: Supports structured and semi-structured data but not unstructured data.

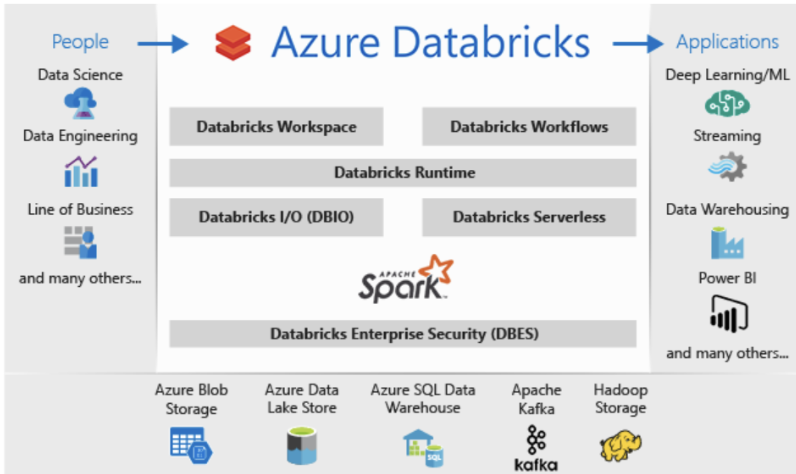

Azure Databricks

What is Apache Spark?

Apache Spark is an open-source distributed computing system that provides an interface for programming entire clusters with implicit data parallelism and fault tolerance.

Azure Databricks Overview

Azure Databricks is a fast, easy, and collaborative Apache Spark-based analytics platform optimized for Azure. It simplifies the setup and management of Spark clusters and integrates tightly with Azure services.

Features of Azure Databricks

- Auto Scaling Clusters:

- Automatically adjusts cluster size based on workload.

- Cluster Modes:

- Fixed Size: A set number of nodes.

- Auto Scaling: Scales between minimum and maximum nodes.

- Example:

- Cluster Name: DbricksAutoScale

- Min Nodes: 2

- Max Nodes: 10

- Delta Lake:

- Description: An open-source storage layer that brings ACID transactions to big data workloads.

- Benefits:

- Ensures data reliability and consistency.

- Enables the Lakehouse architecture.

- Auto Loader:

- Automatically processes new files as they arrive in data lakes.

- Supports schema inference and evolution.

- Unity Catalog:

- Centralized governance for data and AI assets.

- Fine-grained access controls and metadata management.

- Databricks Utilities (dbutils):

- Utilities for interacting with the Databricks File System (DBFS), notebooks, secrets, and more.

- Delta Live Tables:

- Simplifies building and managing data pipelines.

- Supports continuous or scheduled data processing.
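The DbricksAutoScale example above maps onto a cluster definition such as the payload accepted by the Databricks Clusters API (clusters/create), where autoscale.min_workers and autoscale.max_workers set the scaling range and num_workers sets a fixed size. A minimal sketch, assuming the example's node counts refer to worker nodes (the driver node is separate); the helper name is hypothetical, and the spark_version and node_type_id values are placeholders, not real identifiers.

```python
import json


def cluster_spec(name, num_workers=None, min_workers=None, max_workers=None,
                 spark_version="<spark-version>", node_type_id="<node-type-id>"):
    """Build a Clusters API-style payload for a fixed-size or autoscaling cluster.
    spark_version and node_type_id default to placeholders, not real values."""
    spec = {"cluster_name": name, "spark_version": spark_version, "node_type_id": node_type_id}
    if num_workers is not None:
        # Fixed size: a set number of worker nodes.
        spec["num_workers"] = num_workers
    else:
        # Auto scaling: the worker count may vary between the two bounds.
        if min_workers is None or max_workers is None or not 0 <= min_workers <= max_workers:
            raise ValueError("autoscaling requires 0 <= min_workers <= max_workers")
        spec["autoscale"] = {"min_workers": min_workers, "max_workers": max_workers}
    return spec


print(json.dumps(cluster_spec("DbricksAutoScale", min_workers=2, max_workers=10), indent=2))
```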

Diagram: Azure Databricks Architecture, showing clusters, notebooks, and integration with storage services.

Azure Cosmos DB

Introduction to Azure Cosmos DB

Azure Cosmos DB is a globally distributed, multi-model database service. It offers turnkey global distribution, elastic scaling of throughput and storage, guaranteed low latency, and comprehensive SLA-backed availability.

When to Use Azure Cosmos DB

- Global Distribution: Applications that require data to be accessible globally with low latency.

- Flexible Data Models: Supports document, key-value, graph, and wide-column data models through its different APIs.

- High Throughput and Low Latency: For applications demanding fast response times.

- NoSQL Requirements: When a schema-less database is needed for rapid development.

Other Azure Data Services

Azure Stream Analytics

- Description: A real-time analytics and complex event-processing engine designed to analyze and process high volumes of fast streaming data.

- Use Cases:

- IoT data processing.

- Real-time fraud detection.

- Social media analytics.
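Real-time use cases such as fraud detection usually aggregate events over time windows. The sketch below counts hypothetical card transactions in fixed, non-overlapping 60-second (tumbling) windows and flags cards that reach a hypothetical threshold. It processes a list for clarity, whereas a streaming engine applies the same logic continuously as events arrive; in the Stream Analytics query language the equivalent grouping is expressed with GROUP BY and TumblingWindow.

```python
from collections import defaultdict

WINDOW_SECONDS = 60
ALERT_THRESHOLD = 3  # hypothetical: 3+ transactions per card per window is suspicious


def tumbling_window_counts(events, window_seconds=WINDOW_SECONDS):
    """Count events per (key, window_start) in fixed, non-overlapping windows."""
    counts = defaultdict(int)
    for ts, key in events:
        window_start = ts - ts % window_seconds  # align timestamp to its window
        counts[(key, window_start)] += 1
    return dict(counts)


# Hypothetical card transactions: (epoch seconds, card id)
events = [(0, "card-1"), (10, "card-1"), (15, "card-2"), (59, "card-1"), (61, "card-1")]
counts = tumbling_window_counts(events)
alerts = {k: c for k, c in counts.items() if c >= ALERT_THRESHOLD}
print(alerts)  # {('card-1', 0): 3}
```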

Azure Key Vault

- Description: A secure store for secrets, keys, and certificates.

- Use Cases:

- Safeguarding cryptographic keys and secrets used by cloud applications.

- Centralizing secrets management.

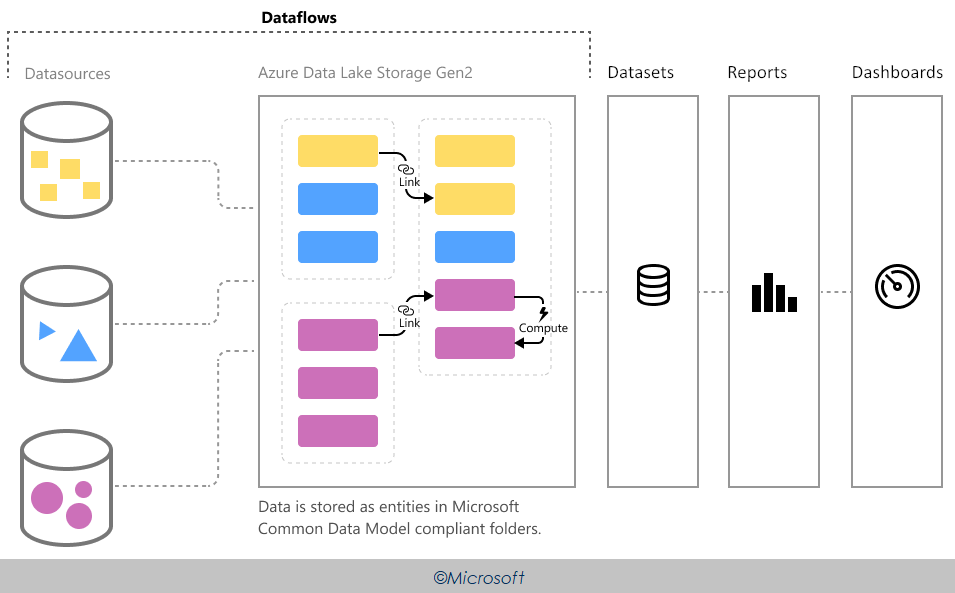

Integrating Azure Data Services

Creating robust data solutions often involves integrating multiple Azure services to handle various aspects of data engineering:

- Data Ingestion:

- Use Azure Data Factory to extract data from various sources and load it into Azure Data Lake Storage.

- Data Processing:

- Use Azure Databricks or Synapse Spark Pool for processing and transforming data.

- Data Storage:

- Store processed data in Azure Synapse SQL Pool for structured querying.

- Use Azure Cosmos DB for globally distributed, NoSQL data storage.

- Data Analysis and Reporting:

- Utilize Azure Synapse Analytics for data warehousing and advanced analytics.

- Connect to Power BI for data visualization.

- Data Orchestration:

- Orchestrate workflows and data movement using Azure Data Factory or Synapse Pipelines.

- Security and Management:

- Store secrets and manage access using Azure Key Vault.
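Orchestration tools such as Azure Data Factory and Synapse Pipelines run activities according to their dependencies (in ADF, each activity's dependsOn setting). The sketch below models an end-to-end flow like the one above as a dependency graph and groups activities into stages that could run in parallel. The activity names are hypothetical, and this uses Python's standard graphlib module (3.9+), not an Azure SDK.

```python
from graphlib import TopologicalSorter

# Hypothetical activities, each mapped to the activities it depends on.
pipeline = {
    "ingest_to_adls": set(),
    "transform_in_databricks": {"ingest_to_adls"},
    "load_synapse_sql_pool": {"transform_in_databricks"},
    "upsert_cosmos_db": {"transform_in_databricks"},
    "refresh_power_bi": {"load_synapse_sql_pool"},
}


def execution_stages(graph):
    """Group activities into stages; everything within a stage could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)  # mark the stage complete so its dependents become ready
    return stages


for number, stage in enumerate(execution_stages(pipeline), start=1):
    print(number, stage)
# 1 ['ingest_to_adls']
# 2 ['transform_in_databricks']
# 3 ['load_synapse_sql_pool', 'upsert_cosmos_db']
# 4 ['refresh_power_bi']
```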

Diagram: Azure Data Services Integration, an end-to-end data pipeline showing how these services integrate.